In an era where artificial intelligence (AI) is advancing at an unprecedented pace, criminal defense attorneys and prosecutors face a formidable challenge: the rise of deepfakes as potential evidence in legal proceedings. This analysis examines the complex issues surrounding AI-generated evidence, highlighting the technological breakthroughs, evolving legal standards, and ethical dilemmas that are transforming the dynamics of the courtroom.

The Deepfake Revolution: A Double-Edged Sword

The term "deepfake," a portmanteau of "deep learning" and "fake," refers to media fabricated with AI, and the underlying technology has evolved at an alarming pace. Using advanced machine learning algorithms, deepfake tools can produce highly realistic yet entirely fabricated video, audio, and images that are often indistinguishable from genuine content. Improved algorithms, greater computational power, and abundant training data have driven this leap in realism [1].

The introduction of Generative Adversarial Networks (GANs) has been pivotal in this evolution. A GAN trains two neural networks against each other: a generator that produces synthetic content and a discriminator that tries to tell that content apart from real samples. As each network improves in response to the other, the output becomes lifelike enough to fool even discerning viewers. Perhaps most concerning is the ability to manipulate video and audio in real time, which lets individuals appear as someone else during live video calls or streams. This capability could be exploited to fabricate alibis or incriminating evidence [1].

The Democratization of Deception

As deepfake technology becomes more accessible through open-source projects and user-friendly applications, the barrier to creating convincing fakes has dropped significantly. This democratization raises serious concerns about the misuse of deepfakes to fabricate evidence, particularly in criminal cases, where the stakes are extremely high [2].

Consider the following scenarios:

- A deepfake video showing a defendant at a crime scene they never visited.

- An AI-generated audio recording of a suspect confessing to a crime they didn't commit.

- A manipulated piece of digital evidence that creates a false alibi for a guilty party.

These are not idle hypotheticals; they are realistic possibilities that criminal defense attorneys and prosecutors must now contend with in their pursuit of justice.

Legal Frameworks: Playing Catch-Up with Technology

Legal frameworks are struggling to keep pace with the rapid advancement of deepfake technology. Current rules, such as the Federal Rules of Evidence (FRE) in the United States, provide a foundation for authenticating evidence but fall short when dealing with the complexities of AI-generated content [3].

Under FRE 901, evidence must be authenticated before admission: the proponent must produce evidence sufficient to support a finding that the item is what the proponent claims it is. Traditional methods of meeting that standard, such as testimony from a witness with knowledge, may be insufficient for deepfakes, which can mimic real people and events with uncanny accuracy [3].

Proposed amendments to FRE 901 would address these challenges by requiring AI-generated evidence to be shown not only accurate but also "valid" and "reliable." A proposed new Rule 901(c) would specifically address deepfakes: if a party challenges the authenticity of such evidence, it would be admissible only if its probative value outweighs its prejudicial effect [3].

The Authentication Arms Race

The so-called deepfake defense, in which a party claims that authentic evidence has been fabricated, poses a significant challenge to the justice system because it invites parties to question the authenticity of nearly any evidence presented at trial. Used broadly, this tactic can overwhelm the legal process, forcing prosecutors and defense attorneys alike to thoroughly authenticate every piece of digital evidence. The resulting demands on time, resources, and expert analysis could slow proceedings and complicate the pursuit of justice, which underscores the need for robust authentication protocols.

As deepfake creators improve their techniques, detection systems must evolve to keep pace. This ongoing battle requires significant investment in research and development of new detection technologies. Legal professionals must now rely on expert witnesses and advanced forensic techniques to authenticate evidence, which can be both time-consuming and costly [4].

Ethical Implications and the Pursuit of Justice

The use of deepfakes in criminal cases raises profound ethical concerns. The potential for AI-generated evidence to mislead juries and influence legal outcomes necessitates higher standards for evidence authentication [5]. Moreover, the "black box" nature of AI algorithms complicates the process of proving fault or understanding how damage occurred [6].

Criminal defense attorneys and prosecutors alike must grapple with several ethical considerations:

- Erosion of Trust: Deepfakes can severely undermine trust in digital evidence, making it easier to dismiss genuine evidence as fake, a phenomenon known as the "liar's dividend" [7, 8].

- Fair Trial Concerns: Deepfake evidence complicates the pursuit of fair trials, potentially leading to wrongful convictions or unwarranted acquittals.

- Privacy and Consent: Deepfakes often involve the unauthorized use of an individual's likeness, raising ethical concerns about privacy and consent, particularly in criminal proceedings.

- Potential for Misuse: The technology can be used for extortion, blackmail, or to manipulate public perception, all of which have serious legal and ethical ramifications.

Case Studies: When Deepfakes Enter the Courtroom

Real-world cases have already demonstrated the potential impact of deepfakes on legal proceedings:

- In 2019, a CEO was deceived into transferring $243,000 after a phone call in which AI-generated audio mimicked the voice of the chief executive of his company's parent firm [9]. The case shows how deepfakes can power sophisticated fraud schemes.

- In a 2020 family law case, a mother produced a manipulated audio recording to falsely suggest that the father had made threats towards her. The tampering was discovered by examining the metadata of the original recording [10].

- In March 2021, a mother was arrested for allegedly using deepfake videos to harass her daughter's cheerleading rivals. Prosecutors later acknowledged that they could not prove the videos had been falsified or created by her, highlighting how difficult it can be for law enforcement to analyze digital evidence [7].

These cases underscore the urgent need for legal professionals to develop strategies to detect and counteract fabricated evidence.

Strategies for Legal Professionals

To navigate the challenges posed by deepfakes, criminal defense attorneys and prosecutors should consider the following strategies:

- Invest in Training: Stay informed about the latest developments in deepfake technology and detection methods.

- Collaborate with Experts: Work closely with digital forensics experts and technologists to authenticate digital evidence.

- Implement Rigorous Authentication Protocols: Develop and adhere to strict guidelines for verifying the authenticity of digital evidence.

- Advocate for Legal Reform: Push for updated laws and regulations that specifically address the challenges of AI-generated evidence.

- Educate the Court: Be prepared to explain the complexities of deepfake technology to judges and juries.

- Scrutinize Chain of Custody: Pay close attention to the provenance of digital evidence, documenting every step of its handling and analysis.

- Consider Ethical Implications: Always weigh the ethical considerations of using or challenging deepfake evidence in criminal proceedings.

Conclusion: Adapting to a New Reality

As deepfake technology continues to advance, the legal system must adopt innovative approaches to manage AI-generated evidence effectively. Criminal defense attorneys and prosecutors stand at the forefront of this technological challenge, charged with preserving the integrity of justice in the face of digital manipulation.

By staying informed, partnering with experts, and championing comprehensive legal reforms, legal professionals can address the deepfake dilemma head-on. The future of criminal justice relies on our ability to adapt swiftly and decisively, ensuring truth and justice remain uncompromised amid increasingly sophisticated digital deceptions.

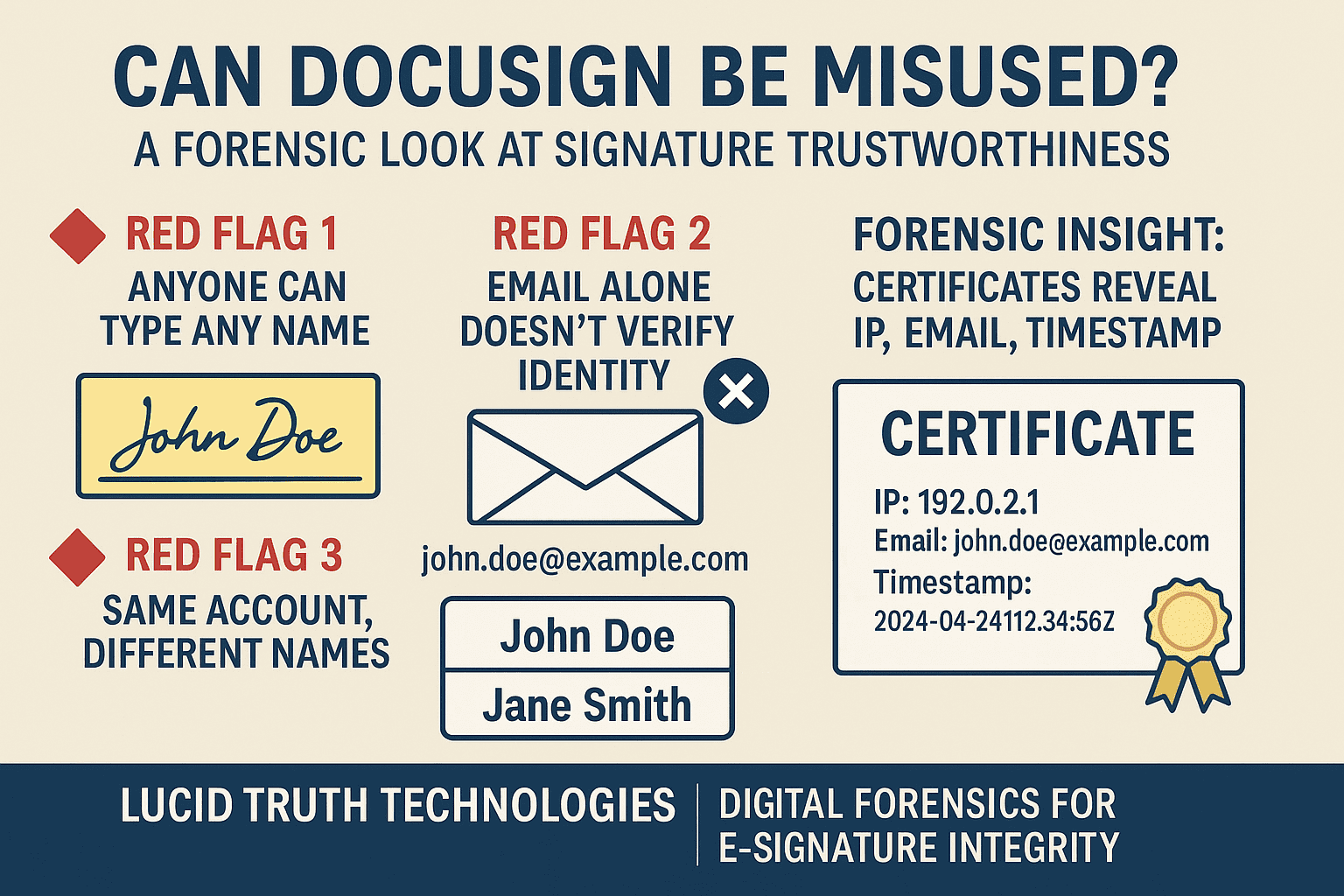

For legal professionals seeking cutting-edge solutions, Lucid Truth Technologies offers the tools and expertise needed to authenticate digital evidence and maintain trust in the justice system. Contact us to see how we can help you meet these challenges today.

[1] https://legamart.com/articles/deepfake-technology/

[3] https://www.jdsupra.com/legalnews/addressing-challenges-of-deepfakes-ai-3814741/

[5] https://www.lawline.com/course/truth-or-tech-navigating-ai-generated-evidence-in-the-courtroom

[6] https://keymakr.com/blog/specific-legal-ai-issues-evolving-frameworks

[7] https://www.policechiefmagazine.org/law-enforcement-era-deepfakes/